As humanity prepares to return to the moon (“to stay,” as they remind us constantly), there’s a lot of infrastructure that needs to be built to make sure astronauts are safe and productive on the lunar surface. Without GPS, navigation and mapping are a lot harder, and NASA is working with lidar company Aeva to create a tool that scans the terrain when ordinary cameras and satellite instruments won’t cut it.

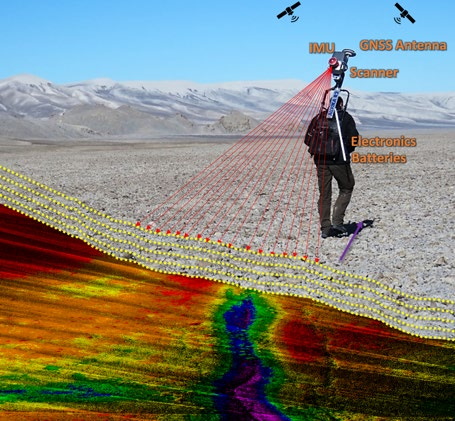

The project is called KNaCK, or Kinematic Navigation and Cartography Knapsack, and it’s meant to act as a sort of hyperaccurate dead reckoning system based on simultaneous localization and mapping (SLAM) concepts.
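To make the "dead reckoning" idea concrete, here is a minimal Python sketch that estimates position by integrating velocity readings over time. It is an illustration only: the function name `dead_reckon`, the flat 2D coordinates and the fixed time step are all assumptions, and this is plain dead reckoning, not the SLAM pipeline KNaCK uses, which the article does not describe.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def dead_reckon(start_xy: Vec2, velocity_samples: List[Vec2], dt_s: float) -> List[Vec2]:
    """Integrate velocity samples (m/s) taken every dt_s seconds.

    Hypothetical helper for illustration: returns the estimated track,
    beginning with the known start position.
    """
    x, y = start_xy
    track = [(x, y)]
    for vx, vy in velocity_samples:
        # Advance the position estimate by velocity * elapsed time.
        x += vx * dt_s
        y += vy * dt_s
        track.append((x, y))
    return track


# Walk east at 1 m/s for two seconds, then north at 0.5 m/s for two seconds.
samples = [(1.0, 0.0), (1.0, 0.0), (0.0, 0.5), (0.0, 0.5)]
print(dead_reckon((0.0, 0.0), samples, dt_s=1.0)[-1])
```

Any error in the velocity readings accumulates in an estimate like this, which is one reason a system would pair it with a map of the surroundings rather than rely on integration alone.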

This is necessary because for now, we have no GPS-type tech on the moon, Mars or any other planet, and although we have high-resolution imagery of the surface from orbit, that’s not always enough to navigate by. For example, at the south pole of the moon, the fixed angle of the sun produces deep shadows that are never illuminated and brightly baked highlights that you need to be careful how you look at. This area is a target for lunar operations due to a good deal of water below the surface, but we just don’t have a good idea of what the surface looks like in detail.

Lidar provides an option for mapping even in darkness or bright sunlight, and it’s already used in landers and other instruments for this purpose. What NASA was looking for, however, was a unit small enough to be mounted on an astronaut’s backpack or to a rover, yet capable of scanning the terrain and producing a detailed map in real time — and determining exactly where it was in it.

Concept image of a backpack-mounted lidar. Image Credits: NASA

That’s what NASA has been working on for the last couple of years, funded through the Space Technology Mission Directorate’s “Early Career Initiative,” which since its launch in 2019 has aimed to “Invigorate NASA’s technological base and best practices by partnering early career NASA leaders with world class external innovators.” In this case that innovator is Aeva, which is better known for its automotive lidar and perception systems.

Aeva has an advantage over many such systems in the fact that its lidar, in addition to capturing the range of a given point, will also capture its velocity vector. So when it scans a street, it knows that one shape is moving towards it at 30 MPH, while another is moving away at five MPH and others are standing still relative to the sensor’s own movement. This, as well as its use of frequency-modulated continuous wave tech instead of flash or other lidar methods, means it is robust to interference from bright sunlight.
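For readers curious how a frequency-modulated continuous wave sensor can report both range and velocity for a point, here is a rough Python sketch of the textbook calculation for a triangular (up then down) chirp. The article does not say what waveform or parameters Aeva uses, so the function name, the sign convention (an approaching target lowers the up-chirp beat frequency and raises the down-chirp one) and every number in the example are assumptions chosen for illustration.

```python
C = 299_792_458.0  # speed of light in m/s


def fmcw_range_and_velocity(f_up_hz, f_down_hz, bandwidth_hz, chirp_time_s, wavelength_m):
    """Recover range (m) and closing speed (m/s) from two beat frequencies.

    Hypothetical helper assuming a triangular chirp, where
    f_up = f_range - f_doppler and f_down = f_range + f_doppler.
    A positive closing speed means the target is approaching.
    """
    slope = bandwidth_hz / chirp_time_s          # chirp slope in Hz per second
    f_range = (f_up_hz + f_down_hz) / 2.0        # part of the beat due to distance
    f_doppler = (f_down_hz - f_up_hz) / 2.0      # part of the beat due to motion
    range_m = C * f_range / (2.0 * slope)
    closing_speed = wavelength_m * f_doppler / 2.0
    return range_m, closing_speed


# Illustrative values only: 1 GHz sweep over 10 microseconds at a 1550 nm wavelength,
# with a target 50 m away closing at 13.4 m/s (roughly 30 MPH).
slope = 1e9 / 10e-6
f_r = 2 * 50.0 * slope / C
f_d = 2 * 13.4 / 1550e-9
r, v = fmcw_range_and_velocity(f_r - f_d, f_r + f_d, 1e9, 10e-6, 1550e-9)
print(round(r, 3), round(v, 3))
```

The useful property is that one pair of measurements yields both numbers at once, so velocity does not have to be inferred by comparing successive scans.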

Luckily, light works the same, for the most part anyway, on the moon as it does here on Earth. The lack of atmosphere does change some things a bit, but for the most part it’s more about making sure the tech can do its thing safely.

“There’s no need to change the wavelengths or spectrum or anything like that. FMCW allows us to get the performance we need, here or anywhere else,” said Aeva CEO Soroush Salehian. “The key is hardening it, and that’s something we’re working with NASA and their partners on.”

“Because we’ve packaged all our elements into this little gold box, it means that part of the system isn’t susceptible to things going on because of a change in atmospheric conditions, like vacuum conditions; that box is sealed permanently, which allows that hardware to be applicable to space applications as well as terrestrial applications,” explained James Reuther, VP of technology at Aeva.

It still requires some changes, he noted:

“Making sure we’re good in a vacuum, making sure we have a way to thermally reject the heat the system generates, and tolerating the shock and vibe during launch, and proving out the radiation environment.”

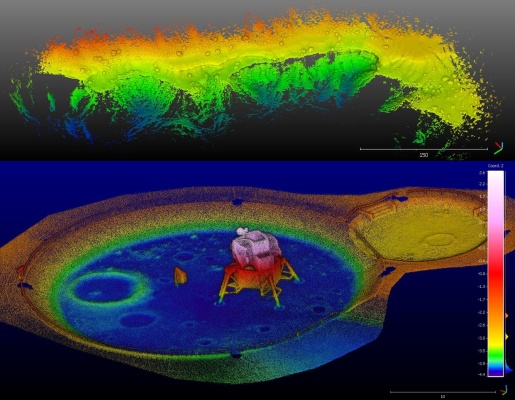

The results are pretty impressive — the 3D reconstruction of the moon landing exhibit in the top image was captured in just 23 seconds of collection by walking around with a prototype unit. (The larger landscape was a bit more of a trek.)

NASA scientists are out there testing the technology right now. “Out there” as in the project lead, Michael Zanetti, emailed me from the desert:

“The project is progressing excellently. The KNaCK project is currently (that is right now, today and this week) in the desert in New Mexico field testing the hardware and software for science data collection and simulated lunar and planetary surface exploration mission operations. This is with a team of scientists and engineers from NASA’s Solar System Exploration Research Virtual Institute (SSERVI) RISE2 and GEODES teams. We’re collecting data with Aeva’s FMCW-LiDAR to make 3D maps of the geologic outcrops here (to make measurements of slope, trafficability, general morphology), and to evaluate how mission operations can make use of person-mounted LiDAR systems for situational awareness.”

And here they are:

Image Credits: NASA

Zanetti said they’ll also soon be testing the Aeva lidar unit on rover prototypes and in a large simulated regolith sandbox at Marshall Space Flight Center. After all, a technology suited to autonomous driving here on Earth may very well suit the moon too.

An interesting related application for this type of lidar in lander and rover situations is in detecting and characterizing clouds of dust. This could be used for evaluating environmental conditions, or estimating the speed and turbulence of a landing, and other things — one thing we know for sure is it makes for a cool-looking point cloud:

Image Credits: NASA

Once completed, KNaCK should be able to simultaneously map an astronaut’s surroundings in real time and tell them where they are and how fast they’re going. This would all feed into a larger system, of course, being relayed back to a lander, up to an orbiter and so on.

All that is TBD, of course, while they hammer out the basics of this promising but still early-stage system. Expect to hear more as we get closer to actual lunar operations — still a few years out.