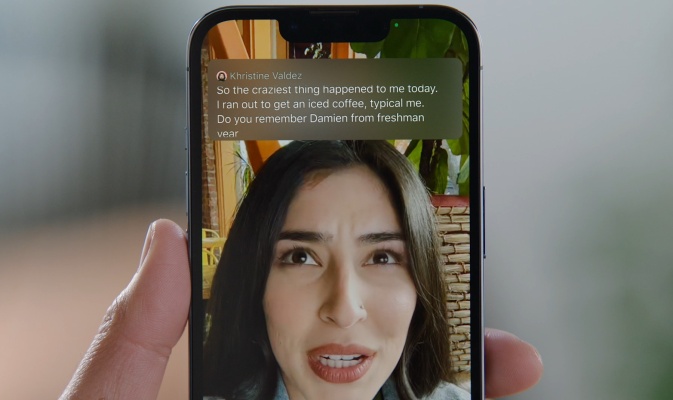

Apple has released a bevy of new accessibility features for iPhone, Apple Watch and Mac, including a universal live captioning tool, improved visual and auditory detection modes, and iOS access to WatchOS apps. The new capabilities will arrive “later this year” as updates roll out to various platforms.

The most widely applicable tool is probably live captioning, already very popular with tools like Ava, which raised $10 million the other day to expand its repertoire.

Apple’s tool will perform a similar function to Ava’s, essentially allowing any spoken content a user encounters to be captioned in real time, from videos and podcasts to FaceTime and other calls. FaceTime in particular will get a special interface with a speaker-specific scrolling transcript above the video windows.

The captions can be activated via the usual accessibility settings and quickly turned on and off, and the pane in which they appear can be expanded or contracted. It all runs on the device’s built-in ML acceleration hardware, so it works when you have spotty or zero connectivity and there’s no privacy question.

This feature may very well clip the wings of independent providers of similar services, as often happens when companies implement first-party versions of traditionally third-party tools, but it may also increase quality and competition. Having a choice between several providers isn’t a bad thing, and users can easily switch between them if, as seems likely to be the case, Apple’s solution is best for FaceTime while another, like Ava, might excel in other situations. For instance, Ava lets you save transcripts of calls for review later — not an option for the Apple captions, but definitely useful in work situations.

Image Credits: Apple

Apple Watch apps will get improved accessibility on two fronts. First, there are some added hand gestures for people, such as amputees, who have trouble with the finer interactions on the tiny screen. A pile of new actions will be available via gestures like a “double pinch,” letting you pause a workout, take a photo, answer a phone call and so on.

Second, WatchOS apps can now be mirrored to the screens of iPhones, where other accessibility tools can be used. This will also be helpful for anyone who likes the smartwatch-specific use cases of the Apple Watch but has difficulty interacting with the device on its own terms.

Existing assistive tools Magnifier and Sound Recognition also get some new features. Magnifier will be getting a “detection mode” later this year that combines several description tools in one place. People detection in Magnifier already lets the user know if either a person or something readable or describable is right in front of them: “person, 5 feet ahead.” Now there will also be a special “door detection” mode that gives details of those all-important features in a building. (I slightly mischaracterized these app modes earlier and this paragraph has been updated for accuracy.)

Image Credits: Apple

Door mode, which like the others can be turned on automatically or deactivated at will, lets the user know if the phone’s camera can see a door ahead, how far away it is, whether it’s open or closed and any pertinent information posted on it, like a room number or address, whether the shop is closed or if the entrance is accessible. These two detection modes and Image Descriptions will all go in a new unified Detection Mode coming to Magnifier later.

Sound recognition is a useful option for people with hearing impairments who want to be alerted when, say, the doorbell rings or the oven beeps. While the feature previously had a library of sound types it worked with, users can now train the model locally to pick up on noises peculiar to their household. Given the variety of alarms, buzzers and other noises we all encounter regularly, this should be quite helpful.

Last is a thoughtful feature for gaming called Buddy Controller. This lets two controllers act as one so that one person can play a game with the aid of a second, should it be difficult or stressful to do it all themselves. Given the complexity of some games even on mobile, this could be quite helpful. Sometimes I wish I had a gaming partner with a dedicated controller just so I don’t have to deal with some game’s wonky camera.

There are a number of other, smaller updates, such as adjusting the time Siri waits before answering a question (great for people who speak slowly) and extra text customization options in Apple Books. And VoiceOver is coming to 20 new languages and locations soon as well. We’ll know more about exact timing and availability when Apple makes more specific announcements down the line.
