I’m back in the South Bay this week, banging away at an introduction in the hotel lobby a few minutes before our crew heads to Shoreline for Google I/O. There’s a guy behind me in a business suit and sockless loafers, taking a loud business meeting on his AirPods. It’s good to be home.

I’ve got a handful of meetings lined up with startups and VCs and then a quiet, robot-free day and a half in Santa Cruz for my birthday. Knowing I was going to be focused on this developer event all day, I made sure to line some stuff up for the week. Turns out I lined up too much stuff – which is good news for all of you.

In addition to the usual roundup and job openings, I’ve got two great interviews for you.

Two weeks back, I posted about a bit of digging around I was doing in the old MIT pages – specifically around the Leg Lab. It included this sentence, “Also, just scrolling through that list of students and faculty: Gill Pratt, Jerry Pratt, Joanna Bryson, Hugh Herr, Jonathan Hurst, among others. Boy howdy.”

After that edition of Actuator dropped, Bryson noted on Twitter,

Boy howdy?

I never worked on the robots, but I liked the lab culture / vibe & meetings. Marc, Gill & Hugh were all welcoming & supportive (I never got time to visit Hugh’s version though). My own supervisor (Lynn Stein) didn’t really do labs or teams.

I discovered subsequent to publishing that I may well be the last person on Earth saying, “Boy Howdy” who has never served as an editor at Creem Magazine (call me). A day or two before, a gen-Z colleague was also entirely baffled by the phrase. It’s one in a growing list of archaic slang terms that have slowly ingratiated themselves into my vernacular, and boy howdy, am I going to keep using it.

As far as the second (and substantially more relevant) bit of the tweet, Bryson might be the one person on my initial list who I had never actually interacted with at any point. Naturally, I asked if she’d be interested in chatting. As she noted in her tweet, she didn’t work directly with the robots themselves, but her work has plenty of overlap with that world.

Bryson currently serves as the Professor of Ethics and Technology at the Hertie School in Berlin. Prior to that, she taught at the University of Bath and served as a research fellow at Oxford and the University of Nottingham. Much of her work focuses on artificial and natural intelligence, including ethics and governance in AI.

Given all the talk around generative AI, the recent open letter and Geoffrey Hinton’s recent exit from Google, you couldn’t ask for better timing. Below is an excerpt from the conversation we recently had during Bryson’s office hours.

Q&A with Joanna Bryson

Image Credits: Hertie School

You must be busy with all of this generative AI news bubbling up.

I think generative AI is only part of why I’ve been especially busy. I was super, super busy from 2015 to 2020. That was when everybody was writing their policy. I also was working part-time because my partner had a job in New Jersey. That was a long way from Bath. So, I cut back to half time and was paid 30%. Because I was available, and people were like, “we need to figure out our policy,” I was getting flown everywhere. I was hardly ever at home. It seems like it’s been more busy, but I don’t know how much of that is because of [generative AI].

Part of the reason I’m going to this much detail is that for a lot of people, this is on their radar for the first time for some reason. They’re really wrapped up in the language thing. Don’t forget, in 2017, I did a language thing and people were freaked out by that too, and was there racism and sexism in the word embeddings? What people are calling “generative AI” – the ChatGPT stuff – the language part on that is not that different. All the technology is not all that different. It’s about looking at a lot of exemplars and then figuring out, given a start, what things are most likely coming next. That’s very related to the word embeddings, which is for one word, but those are basically the puzzle pieces that are now getting stuff together by other programs.

I write about tech for a living, so I was aware of a lot of the ethical conversations that were happening early. But I don’t think most people were. That’s a big difference. All of a sudden your aunt is calling you to ask about AI.

I’ve been doing this since the 80s, and every so often, something would happen. I remember when the web happened, and also when it won chess, when it won Go. Every so often that happens. When you’re in those moments, it’s like, “oh my gosh, now people finally get AI.” We’ve known about it since the 30s, but now we keep having these moments. Everyone was like, “oh my god, nobody could have anticipated this progress in Go.” Miles Brundage showed during his PhD that it’s actually linear. We could have predicted within the month when it was going to pass human competence.

Is there any sense in which this hype bubble feels different from previous ones?

Hertie School was one of the first places to come out with policy around generative AI. At the beginning of term, I said this new technology is going to come in, in the middle of the semester. We’ll get through it, but it’s going to be different at the end than it was at the beginning. In a way, it’s been more invisible than that. I think probably the students are using it extensively, but it isn’t as disruptive as people think, so far. […] I think part of the issue with technological change is everyone thinks that leads to unemployment and it doesn’t.

The people who have been made most unemployed are everybody in journalism — and not by replacing them but rather by stealing their revenue source, which was advertising. It’s a little flippant, but actually there is this whole thing about telephone operators. They were replaced by simple switches. That was the period when it switched to being more women in college than men, and it was because they were mostly women’s jobs. We got the more menial jobs that were being automated. […]

This is James Bessen’s research. Basically what happens is you bring in a technology that makes it easier to do some task, and then you wind up hiring more people for that task, because they’re each more valuable. Bank tellers were one of the early examples that people talked about, but this has been true in weaving and everything else. Then you get this increase in hiring and then you finally satiate. At some point, there’s enough cloth, there’s enough financial services, and then any further automation does a gradual decline in the number of people employed in that sector. But it’s not an overnight thing like people think.

You mention these conversations you were having years ago around setting guidelines. Were the ethical concerns and challenges the same as now? Or have they shifted over time?

There’s two ways to answer that question: what were the real ethical concerns they knew they had? If a government is flying you out, what are they concerned about? Maybe losing economic status, maybe losing domestic face, maybe losing security. Although, a lot of the time people think of AI as the goose that laid the golden egg. They think cyber and crypto are the security, when they’re totally interdependent. They’re not the same thing, but they rely on each other.

It drove me nuts when people said, “Oh, we have to rewrite the AI because nobody had been thinking about this.” But that’s exactly how I conceived of AI for decades, when I was giving all of these people advice. I get that bias matters, but it was like if you only talked about water and didn’t worry about electricity and food. Yes, you need water, but you need electricity and food, too. People decided, “Ethics is important and what is ethics? It’s bias.” Bias is a subset of it.

What’s the electricity and what’s the food here?

One is employment and another is security. A lot of people are seeing more how their jobs are going to change this time, and they’re afraid. They shouldn’t be afraid of that so much because of the AI — which is probably going to make our jobs more interesting — but because of climate change and the kinds of economic threats we’re under. This stuff will be used as an excuse. When do people get laid off? They get laid off when the economy is bad, and technology is just an excuse there. Climate change is the ultimate challenge. The digital governance crisis is a thing, and we’re still worrying about if democracy is sustainable in a context where people have so much influence from other countries. We still have those questions, but I feel like we’re getting on top of them. We have to get on top of them as soon as possible. I think that AI and a well-governed digital ecosystem help us solve problems faster.

I’m sure you know Geoffrey Hinton. Are you sympathetic with his recent decision to quit Google?

I don’t want to criticize Geoff Hinton. He’s a friend and an absolute genius. I don’t think all the reasons for his move are public. I don’t think it’s entirely about policy, why he would make this decision. But at the same time, I really appreciate that he realizes that now is a good time to try to help people. There are a bunch of people in machine learning who are super geniuses. The best of the best are going into that. I was just talking to this very smart colleague, and we were saying that 2012 paper by Hinton et al. was the biggest deal in deep learning. He’s just a super genius. But it doesn’t matter how smart you are — we’re not going to get omniscience.

It’s about who has done the hard work and understood economic consequences. Hinton needs to sit down as I did. I went to a policy school and attended all of the seminars. It was like, “Oh, it’s really nice, the new professor keeps showing up,” but I had to learn. You have to take the time. You don’t just walk into a field and dismiss everything about it. Physicists used to do that, and now machine learning people are doing that. They add noise that may add some insight, but there are centuries of work in political science and how to govern. There’s a lot of data from the last 50 years that these guys could be looking at, instead of just guessing.

There are a lot of people who are sending up alarms now.

So, I’m very suspicious about that too. On the one hand, a bunch of us noticed there were weird things. I got into AI ethics as a PhD student at MIT, just because people walked up to me and said things that sounded completely crazy to me. I was working on a robot that didn’t work at all, and they’d say, “It would be unethical to unplug that.” There were a lot of working robots around, but they didn’t look like a person. The one that looked like a person, they thought they had an obligation to.

I asked them why, and they said, “We learned from feminism that the most unlikely things can turn out to be people.” This is motors and wires. I had multiple people say that. It’s hard to derail me. I was a programmer trying not to fail out of MIT. But after it happened enough times, I thought, this is really weird. I’d better write a paper about it, because if I think it’s weird and I’m at MIT, it must be weird. This was something not enough people were talking about, this over-identification with AI. There’s something weird going on. I had a few papers I’d put out every four years, and finally, after the first two didn’t get read, the third one I called “Robots Should be Slaves,” and then people read it. Now all of a sudden I was an AI expert.

There was that recent open letter about AI. If pausing advancements won’t work, is there anything short-term that can be done?

There are two fundamental things. One is, we need to get back to adequately investing in government, so that the government can afford expertise. I grew up in the ’60s and ’70s, when the tax rate was 50% and people didn’t have to lock their doors. Most people say the ’90s [were] okay, so going back to Clinton-level tax rates, which we were freaked out by at the time. Given how much more efficient we are, we can probably get by with that. People have to pay their taxes and cooperate with the government. Because this was one of the last places where America was globally dominant, we’ve allowed it to be under-regulated. Regulation is about coordination. These guys are realizing you need to coordinate, and they’re like “stop everything, we need to coordinate.” There are a lot of people who know how to coordinate. There are basic things like product law. If we just set enough enforcement in the digital sector, then we would be okay. The AI Act in the EU is like the most boring thing ever, but it’s so important, because they’re saying we noticed that digital products are products and it’s particularly important to enforce when you have a system that’s automatically making decisions that affect human lives.

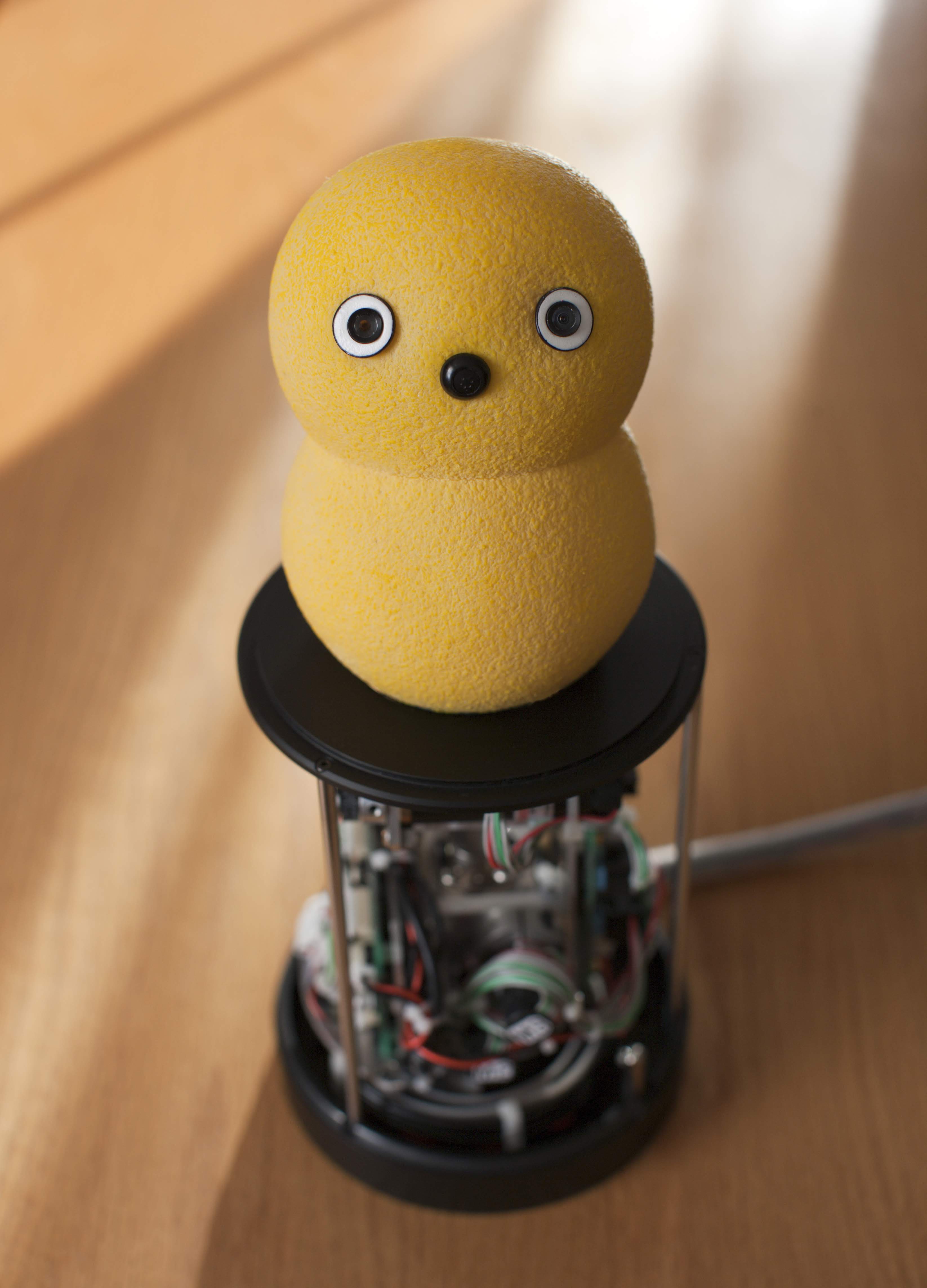

Image Credits: BeatBots LLC / Hideki Kozima / Marek Michalowski

Keepon groovin’

It’s an entirely unremarkable video in a number of ways. A small, yellow robot – two tennis balls formed into an unfinished snowman. Its face is boiled down to near abstraction: two widely spaced eyes stretched above a black button nose. The background is a dead gray, the kind they use to upholster cubicles.

“I Turn My Camera On.” It’s the third track on Spoon’s fifth album, Gimme Fiction, released two years prior – practically 10 months to the day after YouTube went live. It’s the Austin-based indie band’s stripped-down take on Prince-style funk – a perfect little number that could get anyone dancing, be it human or robot. For just over three-and-a-half minutes, Keepon grooves in a hypnotic rhythmic bouncing.

It was the perfect video for the 2007 internet and for the shiny new video site, which Google had acquired for $1.65 billion roughly half a year earlier. The original upload is still live, having racked up 3.6 million views over its lifetime.

A significantly higher-budget follow-up commissioned by Wired did quite well the following year, with 2.1 million views under its belt. This time, Keepon’s dance moves enticed passersby on the streets of Tokyo, with Spoon members making silent cameos throughout.

In 2013, the robot’s makers released a $40 commercial version of the research robot under the name My Keepon. A year later, the internet trail runs cold. Beatbots, the company behind the consumer model, posted a few more robots and then silence. I know all of this because I found myself down this very specific rabbit hole the other week. I will tell you that, as of the writing of this, you can still pick up a secondhand model for cheap on eBay – something I’ve been extremely tempted to do for a few weeks now.

I had spoken with cofounder Marek Michalowski a handful of times during my PCMag and Engadget days, but we hadn’t talked since the Keepon salad days. Surely, he must still be doing interesting things in robotics. The short answer is: yes. Coincidentally, in light of last week’s Google-heavy edition of Actuator, it turns out he’s currently working as a product manager at Alphabet X.

I didn’t realize it when I was writing last week’s issue, but his story turns out to be a great little microcosm of what’s been happening under the Alphabet umbrella since the whole robot startup shopping spree didn’t go as planned. Here’s the whole Keepon arc in his words.

Q&A with Marek Michalowski

Let’s start with Keepon’s origin story.

I was working on my PhD in human-robot interaction at Carnegie Mellon. I was interested in this idea of rhythmic synchrony and social interaction, something that social psychologists were discovering 50 years ago in video-recorded interactions of people in normal situations. They were drawing out these charts of every little micro movement and change in direction and accent in the speech and finding that there are these rhythms that are in sync within a particular person – but then also between people. The frequency of nodding and gesturing in a smooth interaction ends up being something like a dance. The other side of it is that when those rhythms are kind of unhealthy or out of sync, that might be indicative of some problem in the interaction.

You were looking at how we can use robots to study social interaction, or how robots can interact with people in a more natural way?

Psychologists have observed something happening we don’t really understand – the mechanisms. There, robots can both be a tool for us to experiment and better understand those social rhythmic phenomena. And also in the engineering problem of building better interactive robots, those kinds of rhythmic capabilities might be an important part of that. There’s both the science question that could be answered with the help of robots, but also the engineering problem of making better robots that would benefit from an answer to that question.

The more you know about the science, the more you’re able to put that into a robot.

Into the engineering. Basically, that was high level interest. I was trying to figure out what’s a good robotic medium for testing that. During that PhD, I was doing sponsored research trips to Japan, and I met this gentleman named Hideki Kozima, who had been a former colleague of one of my mentors, Brian Scassellati. They had been at MIT together working on the Cog and Kismet projects. I visited Dr. Kozima, who had just recently designed and built the first versions of Keepon. He had originally been designing humanoid robots, and also had psychology research interests that he was pursuing through those robots. He had been setting up some interactions between this humanoid and children, and he noticed this was not a good foundation for kind of naturalistic, comfortable social interactions. They’re focusing on the moving parts and the complexity.

Keepon was the first robot I recall seeing with potential applications for autism treatment. I’ve been reading a bit on ASD recently, and one of the indicators specialists look for is a lack of sustained eye contact and an inability to maintain the rhythm of conversation. With the other robot, the issue was that the kids were focused on the visible moving parts, instead of the eyes.

That’s right. With Keepon, the whole mechanism is hidden away, and it’s designed to really draw attention to those eyes, which are cameras. The nose is a microphone, and the use case here was for researchers or therapists to be able to essentially puppeteer this robot, from a distance in the next room. Over the long term, they could observe how different children are engaging with this toy, and how those relationships develop over time.

There were two Spoon videos. The first was “I Turn My Camera On.”

I sent it to some friends, and they were like, “this is hilarious. You should put it on YouTube.” YouTube was new. This was, I think, March 2007. I actually wrote to the band’s management, and said, “I’m doing this research. I used your song in this video. Is it okay if I put it up on YouTube?” The manager wrote back, like, “oh, you know, let me check with [Britt Daniel].” They wrote back, “nobody ever asks, thanks for asking. Go ahead and do it.”

It was the wild west back then.

It’s amazing that that video is still there and still racking up views, but within a week, it was on the front page of YouTube. I think it was a link from Boing Boing, and from there, we had a lot of incoming interest from Wired Magazine. They set up the subsequent video that we did with the band in Tokyo. On the basis of those kinds of 15 minutes of fame, there was a lot of inbound interest from other researchers at various institutions and universities around the world who were asking, “Hey, can I get one of these robots and do some research with it?” There was also some interest from toy companies, so Dr. Kozima and I started Beatbots as a way of making some more of these research robots, and then to license the Keepon IP.

[…] I was looking to relocate myself to San Francisco, and I had learned about this company called Bot and Dolly – I think it was from a little half-page ad in Wired Magazine. They were using robots in entertainment in a very different way, which is on film sets to hold cameras and lights and do the motion control.

They did effects for Gravity.

Yes, exactly. They were actually in the midst of doing that project. That was a really exciting and compelling use of these robots that were designed for automotive manufacturing. I reached out to them, and their studio was this amazing place filled with robots. They let me rent a room in the corner to do Beatbots stuff, and then co-invest in a machine shop that they wanted to build. I set up shop there, and over the next couple of years I became really interested in the kinds of things they were doing. At the same time, we were doing a lot of these projects, which we were talking with various toy companies about. Those are on the Beatbots website. […] You can do a lot when you’re building one research robot. You can craft it by hand and money is no object. You can buy kind of the best motors and so forth. It’s a very different thing to put something in a toy store and the retail price is roughly four times the bill of materials.

Image Credits: BeatBots LLC / Hideki Kozima / Marek Michalowski

The more you scale, the cheaper the components get, but it’s incredibly hard to hit a $40 price point with a first-gen hardware project.

With mass commercial products, that’s the challenge of how can you reduce the number of motors and what tricks can you do to make any given degree of freedom serve multiple purposes. We learned a lot, but also ran into physics and economics challenges.

[…] I needed to decide, do I want to push on the boundaries of robotics by making these things as inexpensively as possible? Or would I rather be in a place where you can use the best available tools and resources? That was a question I faced, but it was sort of answered for me by the opportunities that were coming up with the things that Bot and Dolly was doing.

Google acquired Bot and Dolly with eight or so other robotics companies, including Boston Dynamics.

I took that up. That’s when the Beatbots thing was put on ice. I’ve been working on Google robotics efforts for – I guess it’s coming on nine years now. It’s been really exciting. I should say that Dr. Kozima is still working on Keepon in these research contexts. He’s a professor at Tohoku University.

News

Image Credits: 6 River Systems

Hands down the biggest robotics news of this week arrived at the end of last week. After announcing a massive 20% cut to its 11,600-person staff, Shopify said it was selling off its Shopify Logistics division to Flexport. Soon after, word got out that it had also sold off 6 River Systems to Ocado, a U.K. licenser of grocery technology.

I happened to speak to 6 River Systems cofounder Jerome Dubois about how the initial Shopify/6 River deal was different than Amazon’s Kiva purchase. Specifically, the startup made its new owner agree to continue selling the technology to third parties, rather than monopolizing it for its own 3PL needs. Hopefully the Ocado deal plays out similarly.

“We are delighted to welcome new colleagues to the Ocado family. 6 River Systems brings exciting new IP and possibilities to the wider Ocado technology estate, as well as valuable commercial and R&D expertise in non-grocery retail segments,” Ocado Intelligent Automation CEO James Matthews said in a release. “Chuck robots are currently deployed in over 100 warehouses worldwide, with more than 70 customers. We’re looking forward to supporting 6 River Systems to build on these and new relationships in the years to come.”

Image Credits: Locus Robotics

On a very related note, DHL this week announced that it will deploy another 5,000 Locus Robotics systems in its warehouses. The two companies have been working together for a bit, and the logistics giant is clearly quite pleased with how things have been going. DHL has been fairly forward-thinking on warehouse automation, including the first major purchase of Boston Dynamics’ truck-unloading robot, Stretch.

Locus remains the biggest player in the space, while managing to remain independent, unlike its largest competitor, 6 River. CEO Rick Faulk recently told me that the company is planning an imminent IPO, once market forces calm down.

A sorter machine from AMP Robotics.

Recycling robotics heavyweight AMP Robotics this weekend announced a new investment from Microsoft’s Climate Fund, pushing its $91 million Series C up to $99 million. There has always been buzz around the role robotics could/should have in addressing climate change. The Denver-based firm is one of the startups tackling the issue head-on. It’s also a prime example of the “dirty” part of the three robotic Ds.

“The capital is helping us scale our operations, including deploying technology solutions to retrofit existing recycling infrastructure and expanding new infrastructure based on our application of AI-powered automation,” founder and CEO Matanya Horowitz told TechCrunch this week.

Image Credits: Amazon

Business Insider has the scoop on an upcoming version of Amazon’s home robot, Astro. We’ve known for a while that the company is really banking on the product’s success. It seems like a longshot, given the checkered history of companies attempting to break into the home robotics market. iRobot is the obvious exception. Not much update on that deal, but last we heard about a month or so ago is that regulatory concerns have a decent shot at sidelining the whole thing.

Astro is an interesting product that is currently hampered by pricing and an unconvincing feature set. It’s going to take a lot more than what’s currently on offer to turn the tide in home robots. We do know that Amazon is currently investing a ton into catching up with the likes of ChatGPT and Google on the generative AI front. Certainly, a marriage of the two makes sense. It’s easy to see how conversational AI could go a long way in a product like Astro, whose speech capabilities are currently limited.

Robot Jobs for Human People

Agility Robotics (20+ Roles)

ANYbotics (20+ Roles)

AWL Automation (29 Roles)

Bear Robotics (4 Roles)

Canvas Construction (1 Role)

Dexterity (34 Roles)

Formic (8 Roles)

Keybotic (2 Roles)

Neubility (20 Roles)

OTTO Motors (23 Roles)

Prime Robotics (4 Roles)

Sanctuary AI (13 Roles)

Viam (4 Roles)

Woven by Toyota (3 Roles)

Image Credits: Bryce Durbin/TechCrunch